notebook 3 - batch correction¶

This notebook will introduce you very briefly to the process of batch correction using another dataset extracted from Kotliarov et al. 2020 Nature Medicine:

Broad immune activation underlies shared set point signatures for vaccine responsiveness in healthy individuals and disease activity in patients with lupus (https://www.nature.com/articles/s41591-020-0769-8)

[1]:

#import necessary python packages

import scanpy as sc #software suite of tools for single-cell analysis in python

import besca as bc #internal BEDA package for single cell analysis

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

import numpy as np

import besca as bc

sc.logging.print_header() #output an overview of the software versions that are loaded

scanpy==1.9.1 anndata==0.8.0 umap==0.5.3 numpy==1.21.5 scipy==1.9.0 pandas==1.4.3 scikit-learn==1.1.1 statsmodels==0.13.2 python-igraph==0.9.11 pynndescent==0.5.4

[2]:

from IPython.display import HTML

task = "<style>div.task { background-color: #ffc299; border-color: #ff944d; border-left: 7px solid #ff944d; padding: 1em;} </style>"

HTML(task)

[2]:

[3]:

tag = "<style>div.tag { background-color: #99ccff; border-color: #1a8cff; border-left: 7px solid #1a8cff; padding: 1em;} </style>"

HTML(tag)

[3]:

[4]:

FAIR = "<style>div.fair { background-color: #d2f7ec; border-color: #d2f7ec; border-left: 7px solid #2fbc94; padding: 1em;} </style>"

HTML(FAIR)

[4]:

Dataset¶

Here we will reload the dataset we wrote out after performing the regressout to perform a batch correction on Donor.

[5]:

adata = bc.datasets.Kotliarov2020_processed()

[6]:

adata

[6]:

AnnData object with n_obs × n_vars = 47511 × 1271

obs: 'CELL', 'CONDITION', 'sample_type', 'donor', 'tenx_lane', 'cohort', 'batch', 'sampleid', 'timepoint', 'percent_mito', 'n_counts', 'n_genes', 'leiden', 'protein_leiden', 'leiden_r1.0', 'celltype0', 'celltype1', 'celltype2', 'celltype3', 'celltype_flowjo'

var: 'ENSEMBL', 'SYMBOL', 'feature_type', 'n_cells', 'total_counts', 'frac_reads'

uns: 'celltype0_colors', 'celltype1_colors', 'celltype2_colors', 'celltype3_colors', 'celltype_flowjo_colors', 'leiden', 'leiden_colors', 'leiden_r1.0_colors', 'neighbors', 'protein_leiden_colors', 'rank_genes_groups'

obsm: 'X_pca', 'X_umap', 'protein', 'protein_umap'

obsp: 'connectivities', 'distances'

Batch correction¶

After normalization, there could still be confounders in the data. Technical confounders (batch effects) can arise from difference in reagents, isolation methods, the lab/experimenter who performed the experiment, even which day/time the experiment was performed. Further factors like cell size, cell cycle phase , etc. can introduce unwanted variance in your data that may not be of biological interest. Various approaches exist that can account for and, ideally, remove technical confounders, this is called a batch correction. In general technical confounders can only be removed if the batch effect is orthogonal to the biological differences in each batch! In cases were this is not the case applying a batch correction does not make sense since you will never be able to differentiate if the result of significant differences are due to the batch or true biological differences.

Here we will discuss a batch correction based on the Batch balanced KNN (in python bbknn) since this is the one we most commonly use, but there are many other approaches as well.

[7]:

adata.obs.batch.value_counts()

[7]:

1 24593

2 22918

Name: batch, dtype: int64

[8]:

#rename the Donors

adata.obs.batch = adata.obs.batch.to_frame().replace({1:'batch_1' , 2:'batch_2'}).batch

perform the dataprocessing pipeline¶

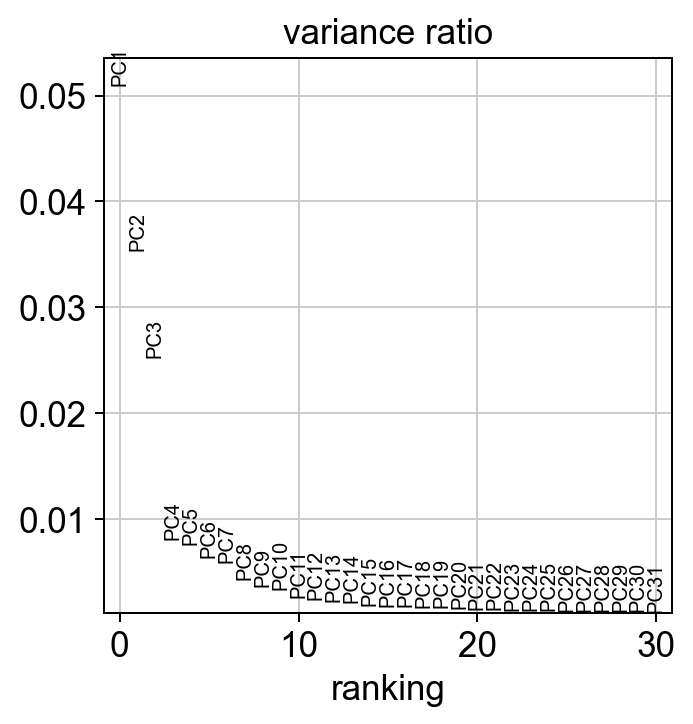

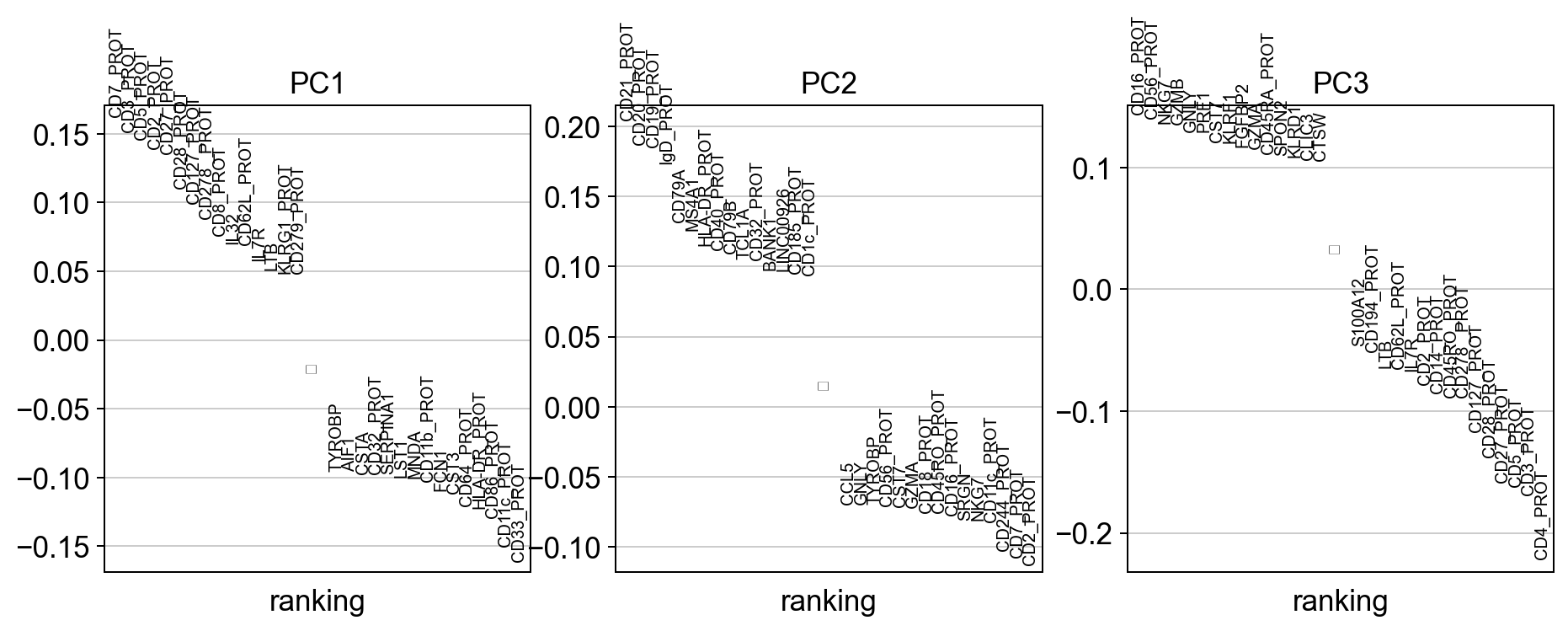

principle component analysis¶

[9]:

#define random seed

random_seed = 0

sc.settings.set_figure_params(dpi=90)

#calculate 50 principle components of the dataset

sc.tl.pca(adata, random_state=random_seed, svd_solver='arpack')

#visualize the amount of variance explained by each PC

sc.pl.pca_variance_ratio(adata)

#visualize the loadings onto the first 3 PCs

sc.pl.pca_loadings(adata)

[10]:

adata_uncorrected = adata.copy()

import bbknn

bbknn.bbknn(adata, batch_key='batch') #perform alternative batch correction method

#sc.pp.neighbors(adata, n_neighbors=15, random_state = random_seed, n_pcs=50, )

Leiden clustering¶

[11]:

sc.tl.leiden(adata, random_state=random_seed)

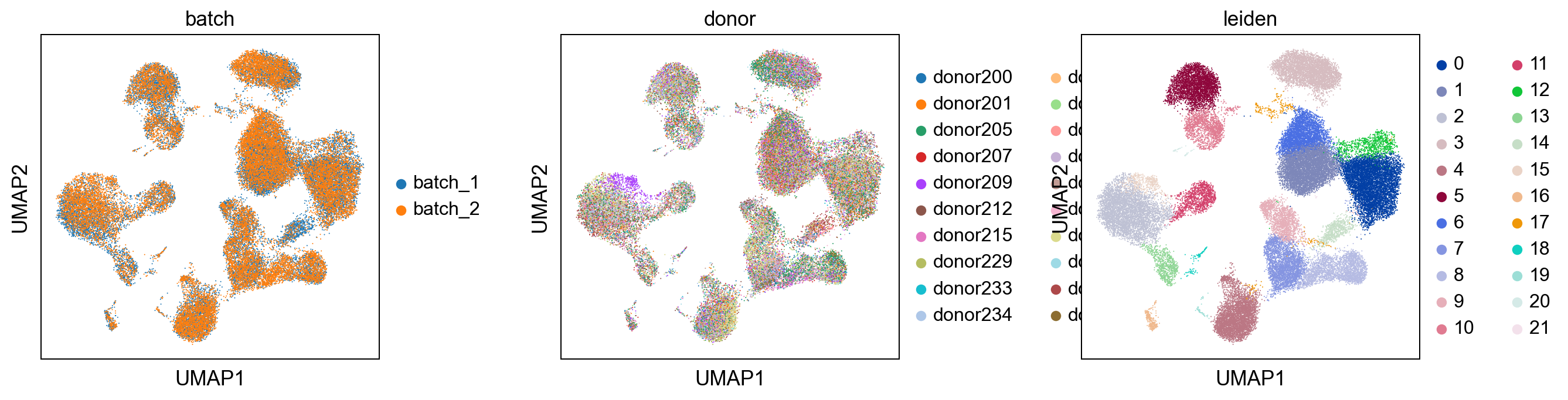

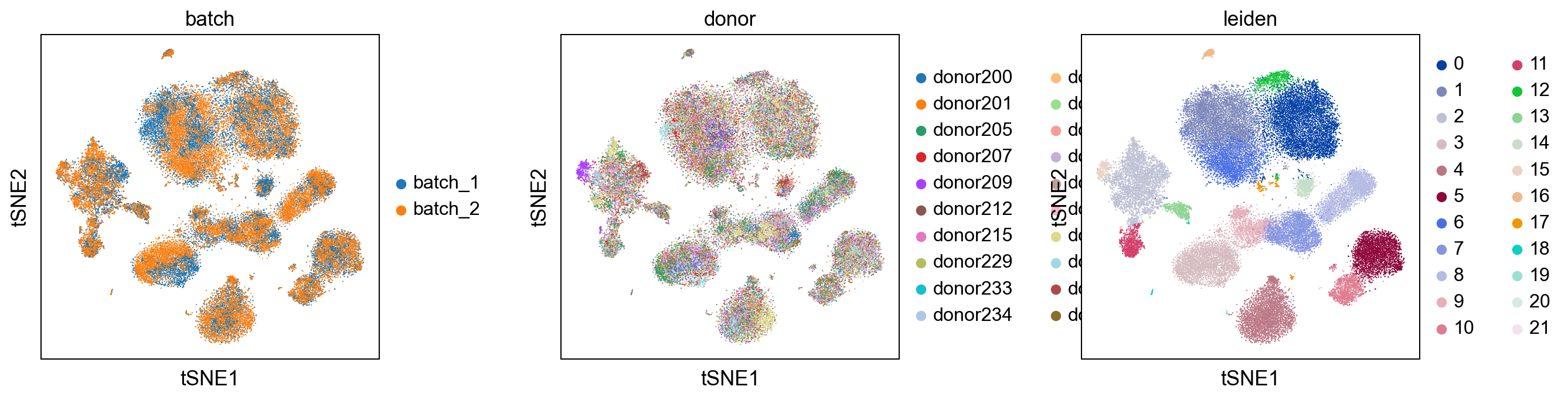

UMAP & t-SNE¶

[12]:

%%time

sc.tl.umap(adata, random_state = random_seed)

CPU times: user 24.6 s, sys: 2.69 s, total: 27.3 s

Wall time: 14.5 s

[13]:

%%time

sc.tl.tsne(adata, random_state = random_seed)

CPU times: user 14min 15s, sys: 3min 3s, total: 17min 19s

Wall time: 2min 47s

[14]:

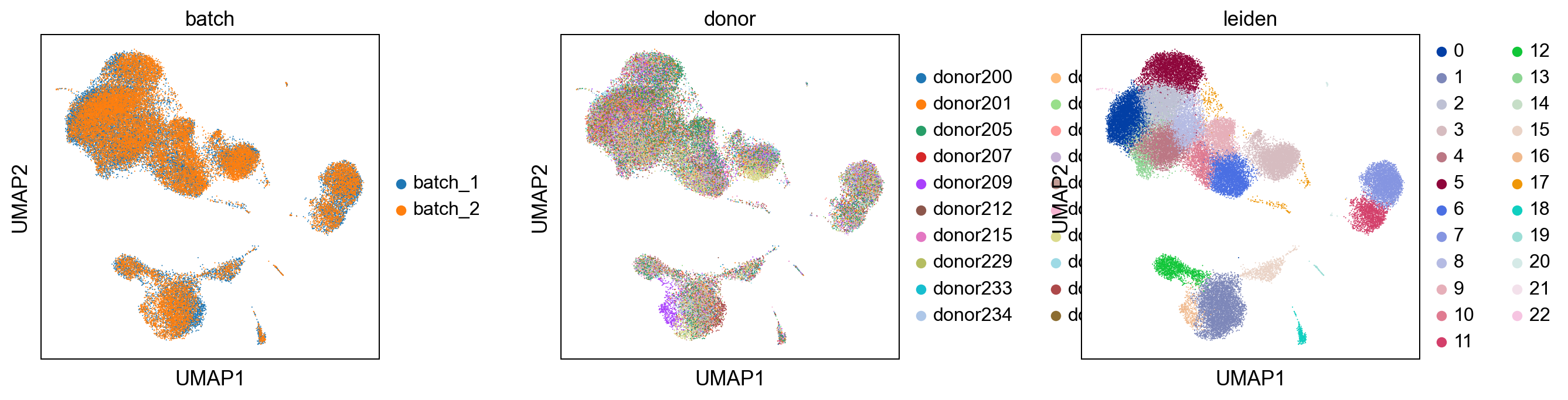

sc.pl.umap(adata, color = ['batch', 'donor', 'leiden'], wspace = 0.4)

sc.pl.tsne(adata, color = ['batch', 'donor', 'leiden'], wspace = 0.4)

Comparision with uncorrected dataset¶

[15]:

#read in uncorrected dataset

sc.pp.neighbors(adata_uncorrected, n_neighbors=15, random_state = random_seed, n_pcs=50)

[16]:

##comparision of louvain clustering with unbatchcorrected dataset

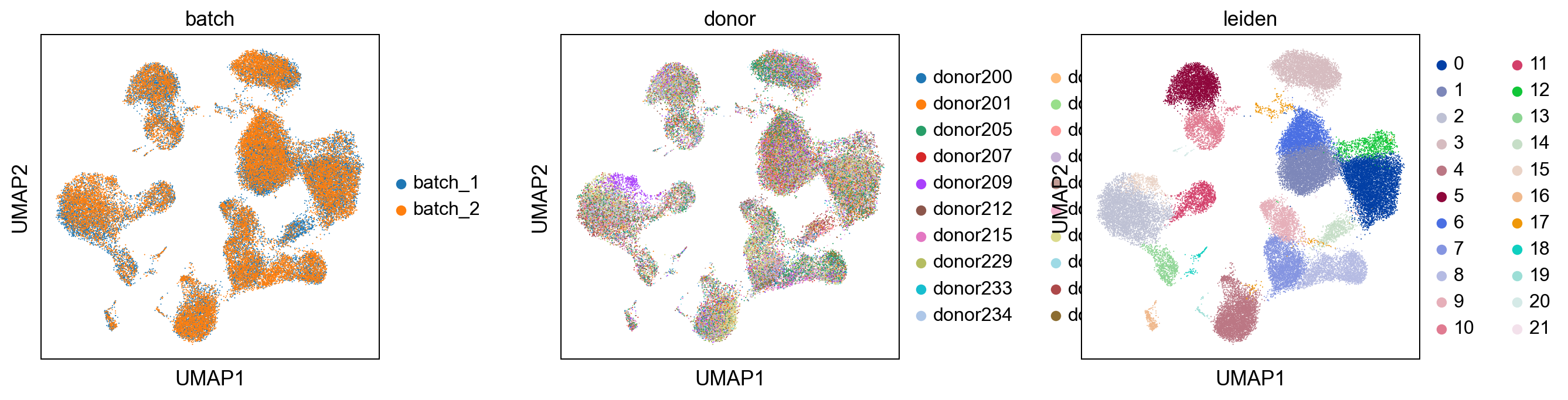

print('uncorrected')

sc.pl.umap(adata_uncorrected, color = ['batch' , 'donor', 'leiden'], wspace = 0.4)

print('corrected')

sc.pl.umap(adata, color = ['batch' , 'donor', 'leiden'], wspace = 0.4)

uncorrected

corrected

[17]:

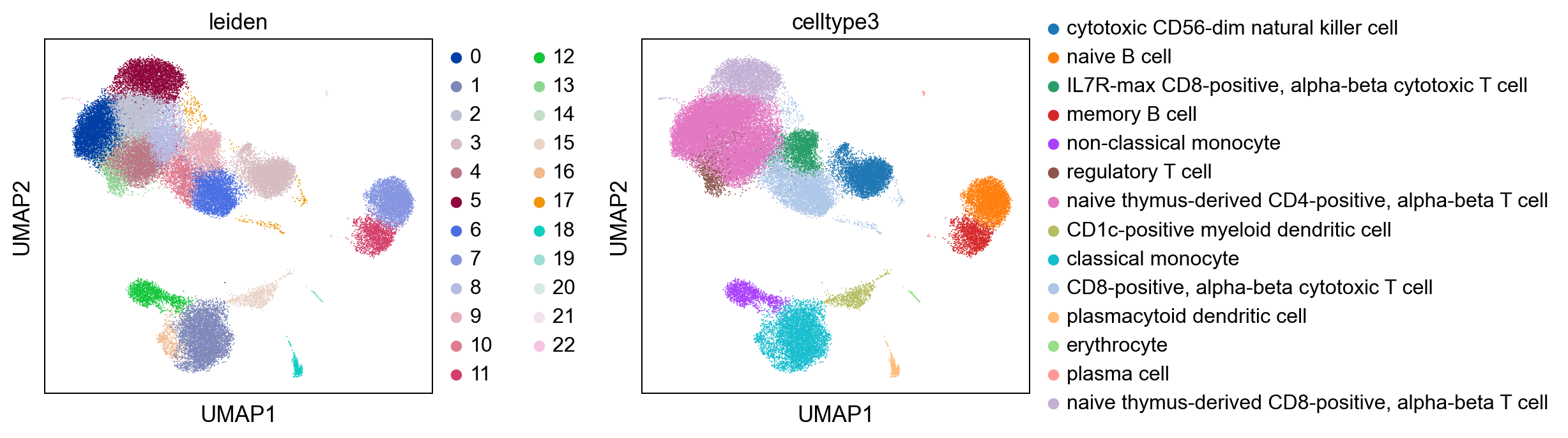

print('uncorrected')

sc.pl.umap(adata_uncorrected, color = ['leiden', 'celltype3'], wspace = 0.4)

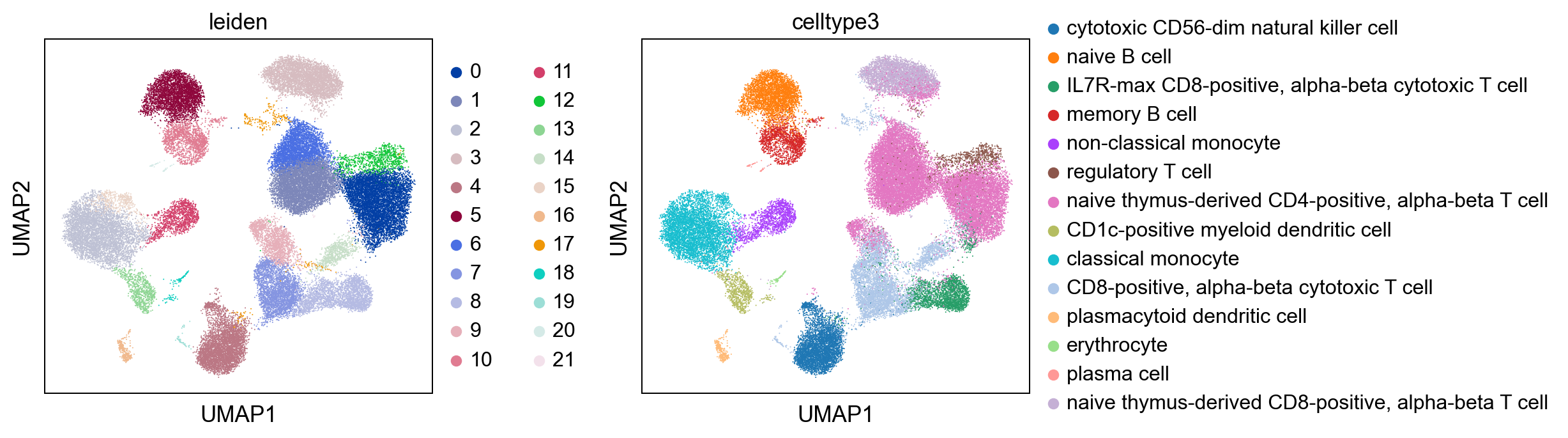

print('corrected')

sc.pl.umap(adata, color = ['leiden', 'celltype3'], wspace = 0.4)

uncorrected

corrected

[ ]: